UTF-8

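Unicode is a character set that assigns a unique number to more than a hundred thousand characters from writing systems around the world. ASCII only covers 128 characters (mostly English letters, digits and punctuation), so it can’t represent characters in all languages. Unicode includes the ASCII characters and is used to identify all the other characters as well.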

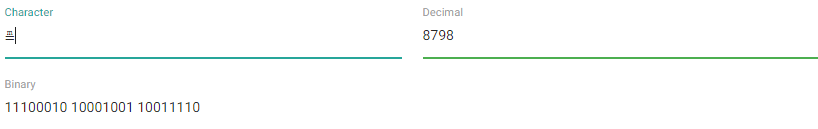

Unicode itself is not a type of representation but a character set. Each character has a unique number (called a code point) assigned to it to make it identifiable to the computer. For example, the decimal number 65493 translates to: ᅰ. To store these numbers as bytes, Unicode uses several encoding schemes called UTFs, which stands for 'Unicode Transformation Format'. One of them is UTF-8, a variable-length encoding scheme that uses 1, 2, 3 or 4 bytes to represent a character. It is also the most widely used UTF encoding. The larger the number that represents the character, the more bytes are needed to encode it. I used an interactive on the Computer Science Field Guide to turn a Japanese character into the number that represents it and to see what it would be in UTF-8…
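The link between a character's number and its UTF-8 length can be checked with a few lines of Python. This is only a minimal sketch: the four sample characters and the helper name `utf8_length` are my own choices for illustration and do not come from the interactive mentioned above.

```python
# Sample characters picked for illustration only, written as escapes:
# U+0041 (Latin capital A), U+00E9 (e with acute accent),
# U+20AC (euro sign), U+1F600 (an emoji).
samples = ["\u0041", "\u00e9", "\u20ac", "\U0001f600"]


def utf8_length(ch):
    """Return how many bytes UTF-8 needs for a single character."""
    return len(ch.encode("utf-8"))


for ch in samples:
    # ord() gives the character's Unicode number (code point).
    print(ord(ch), utf8_length(ch))
```

The numbers printed grow from 65 up to 128512, and the byte counts grow with them from 1 to 4.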

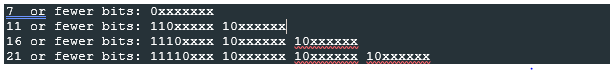

As you can see, the first byte of the binary number above starts with three ones and a 0. UTF-8 does this so we know that three bytes (groups of 8 bits) are used to represent the character. The other two bytes each start with '10', so we know that they continue the sequence started by the first byte.
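The same prefix rule can be written as a small Python sketch. The function names `sequence_length` and `is_continuation` are hypothetical helpers, and the test character U+3042 (a Japanese hiragana letter) is my own example; it is not necessarily the character shown in the image.

```python
def sequence_length(first_byte):
    """Work out how many bytes a UTF-8 sequence has from its first byte."""
    if first_byte >> 7 == 0b0:       # 0xxxxxxx: single byte (ASCII)
        return 1
    if first_byte >> 5 == 0b110:     # 110xxxxx: two bytes
        return 2
    if first_byte >> 4 == 0b1110:    # 1110xxxx: three bytes
        return 3
    if first_byte >> 3 == 0b11110:   # 11110xxx: four bytes
        return 4
    raise ValueError("not a valid UTF-8 leading byte")


def is_continuation(byte):
    """Continuation bytes always start with the bits 10."""
    return byte >> 6 == 0b10


encoded = "\u3042".encode("utf-8")
bits = [format(b, "08b") for b in encoded]
print(bits)                          # ['11100011', '10000001', '10000010']
print(sequence_length(encoded[0]))   # 3
```

A decoder can use the first byte to learn how many bytes to read, then check that each following byte really is a continuation byte.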

If the character only needed two bytes to represent it, the first byte would start with only two ones followed by one 0, and it would be followed by a single byte starting with '10'. For example, the character 㣳, which is a Chinese character, turns into the number 14579, which is 00111000 11110011 in binary. The two-byte pattern only has room for 11 bits of the number, and this number needs more than that, so it needs the three-byte pattern, which has room for 16 bits once the 1's and 0's required by UTF-8 are added. You can use the template below to find out how the symbol is represented; in this case, it is 11100011 10100011 10110011 in UTF-8.
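To double-check the worked example, here is a rough Python sketch that fills in the three-byte pattern by hand and compares the result with Python's built-in encoder. The function name `encode_three_bytes` is hypothetical, and the sketch only handles the three-byte range (it does not reject the surrogate numbers that real UTF-8 forbids).

```python
def encode_three_bytes(code_point):
    """Fill in the pattern 1110xxxx 10xxxxxx 10xxxxxx by hand."""
    if not 0x0800 <= code_point <= 0xFFFF:
        raise ValueError("this sketch only covers three-byte characters")
    byte1 = 0b11100000 | (code_point >> 12)             # top 4 bits
    byte2 = 0b10000000 | ((code_point >> 6) & 0b111111)  # middle 6 bits
    byte3 = 0b10000000 | (code_point & 0b111111)         # last 6 bits
    return bytes([byte1, byte2, byte3])


manual = encode_three_bytes(14579)
pattern = " ".join(format(b, "08b") for b in manual)
print(pattern)                                # 11100011 10100011 10110011
print(manual == chr(14579).encode("utf-8"))   # True
```

The 16 bits of the number are split into groups of 4, 6 and 6 bits, and each group is dropped into the free positions of the pattern.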